-

Posts

719 -

Joined

-

Last visited

-

Days Won

5

Content Type

Profiles

Forums

Events

Gallery

Blogs

Posts posted by bushindo

-

-

I get similar numbers as bonanova and prime.

I did 1000 trials of playing 1000 games per trial.

Average final bankroll ~ $2E10

Median final bankroll = $0.49

Number of winning trials = 465

If you're on a Windows machine with a Java JDK installed (free to download from Oracle if you don't have it already): copy the code below and paste it into a txt file called LetItRide.java, then on a command prompt in that directory type javac LetItRide.java to compile it, then type java LetItRide to run it.

import java.util.Random; import java.util.Arrays; class LetItRide { static int trials = 1000; static int games = 1000; public static void main(String[] args) { double[] bankroll; double[] multiplier = {0.7, 0.8, 0.9, 1.1, 1.2, 1.5}; int trialnum, gamenum; double sumOfTrials; int winningTrials; Random randnums; bankroll = new double[trials]; sumOfTrials = 0; winningTrials = 0; randnums = new Random(); for(trialnum=0; trialnum<trials; trialnum++) { bankroll[trialnum] = 1; for(gamenum=0; gamenum<games; gamenum++) { bankroll[trialnum] *= multiplier[randnums.nextInt(6)]; } sumOfTrials += bankroll[trialnum]; winningTrials += (bankroll[trialnum]>1) ? 1:0; } System.out.println("Average final bankroll: "+sumOfTrials/trials); Arrays.sort(bankroll); System.out.println("Median final bankroll: "+bankroll[trials/2]); System.out.println("Numer of winning trials: "+winningTrials); } }Thanks for the code. I think I see what is causing the discrepancy between the simulation results. I made a mistake in transcribing the game probabilities...

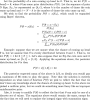

The correct game probabilities are (.7, .8, .9, 1.1, 1.2, 1.5), but I used (.7, .8, .9, 1, 1.2, 1.5), which causes the discrepancies in results. Let ON be the bankroll at the end of N consecutive games that let the winnings ride. Using the correct game probabilities,

log( ON ) ~ Gaussian( -0.0003470277 * N, 0.2565272 sqrt(N) )

That is, the log of ON is normally distributed with mean -0.0003470277 * N and standard deviation 0.2565272 sqrt(N).

As shown in post #45, we can calculate the probability of ending up ahead for any N using the distribution above. For N=1,000, the chance of winning is 0.4829388, which is consistent with the simulation results from bonanova, prime, and plasmid. For N=1,000,000, the chance of winning is 0.0880612. If N= 100,000,000, then the chance of ending up with a larger bankroll than 1 at the end is 5.351129e-42.

-

This puzzle seemed to merit an analysis that went further than I had taken it.

Especially since most of my analysis was dead wrong.

Excellent insight, bonanova. The idea of using log is brilliant. However, my coding (and theoretical results) disagree with the simulation shown you and prime.

Using bonanova's insight of using log and the central limit theorem (see attached pdf for detail), it is easy to construct the probability of winning (larger bankroll at the end) for any number of games N if we use the strategy of letting the winning ride.

Incidentally, the theoretical result using the gaussian curve happens to answer prime's inquiry showing that the chance of winning approaches 0 as the number of games approach infinity. It also provides a statistical distribution for the case where N = 1000.

If N is 1000, then my simulation and theoretical calculation agree that the chance of winning is about 2.12%. However, both bonanova and prime agree that the chance of winning is about 48% or so.

I'm not sure what is causing the difference in the simulation. (And the fact that both bonanova and prime have the same result indicates that there might be an obvious logic that I might have missed). Perhaps either bonanova or prime could elaborate on how the simulation was done?

-

-

Winoc sells four types of products. The resources needed to produce one unit of each and the sales prices is listed below:

Resource Product 1 Product 2 Product 3 Product 4

Raw Material 2 3 4 7

Hours of labor 3 4 5 6

Sales Price ($) 4 6 7 8

Currently, 4,600 units of raw material and 5,000 labor hours are available.To meet customer demands, exactly 950 total units must be produced. Customers also demand that at least 400 units of product 4 be produced. What is the solution that maximizes Winoc's sales revenue?

Here's my try

200 of product 1, 0 of product 2, 350 of product 3, 400 of product 4

-

Let a, b, c, and d stand for the fraction of a project that A, B, C, and D can do within each day, respectively.

It is straightforward to construct a linear equation

Bx = y

where B is a 4x4 matrix given from the OP, and y = (1,1,1,1)'. Solving for x, we get the following values for A, B, C, and D, respectively.

[1,] 0.02614608

[2,] 0.03372183

[3,] 0.04013209

[4,] 0.01705517

Units are project/day. From here, it is simple to compute how much each individual contributes to a project.

I like your answer. It is interesting how in America this problem is solved differently from my home country.

I'm intrigued. How do people from your country solve this problem?

-

essentially, i am asking how much of the duration is caused by each individual on the three man team if we assume that a person is just as productive no matter who they work with

So, by your later question, are you asking for how long each individual would take to complete the project working on his/her own? [edit from here] This would then, of course, make that proportional payment easier, using [actual time]/[individual time] to determine the proportion of total payment each individual should receive.

How many days does each person contribute to the total project days?Anna (A), BIll (B), Cindy ©, and Dante (D) work on a project.

Together, A, B, and C can complete it in 10 days.

Together, B, C, and D can complete it in 11 days.

Together, C, D, and A can complete it in 12 days.

Together, D, A, and B can complete it in 13 days.

Who is the best performer? Prove your answer.

In each scenario, 1 worker is excluded and everyone else contributes.

Therefore the best worker is the one without whom the work takes the longest. That would mean C is the best worker since without him it takes 13 days to complete the task.

Individuals don't contribute to the project's time.

A can do a project, alone, in a days, but a does not increase the time of a project.

Rather, 1/a contributes to the reciprocal of the project's time.

1/a +1/b+1/c = 1/10, etc.

Allow me to rephrase cause I disagree: Including someone on a project and excluding someone else directly affects the amount of days a project takes. So in terms of making a three man team we can determine the day load attributed to each person (as well as rank them by productivity). In terms of proportional pay for effectiveness this is very important as we in manufacturing projects pay individuals incentives based on their individual contribution to group projects.

Let a, b, c, and d stand for the fraction of a project that A, B, C, and D can do within each day, respectively.

It is straightforward to construct a linear equation

Bx = y

where B is a 4x4 matrix given from the OP, and y = (1,1,1,1)'. Solving for x, we get the following values for A, B, C, and D, respectively.

[1,] 0.02614608

[2,] 0.03372183

[3,] 0.04013209

[4,] 0.01705517

Units are project/day. From here, it is simple to compute how much each individual contributes to a project.

-

Anna (A), BIll (B), Cindy ©, and Dante (D) work on a project.

Together, A, B, and C can complete it in 10 days.

Together, B, C, and D can complete it in 11 days.

Together, C, D, and A can complete it in 12 days.

Together, D, A, and B can complete it in 13 days.

Who is the best performer? Prove your answer.

In each scenario, 1 worker is excluded and everyone else contributes.

Therefore the best worker is the one without whom the work takes the longest. That would mean C is the best worker since without him it takes 13 days to complete the task.

-

1

1

-

-

:

Make the star cut: 3 x 5 around plus the middle=16 ,

two points added cut though 5 chunks with red

cut through 7 chunks with green and cut through

4 chunks with blue.

... it takes 7 points to get 32 slices.

Correct. The star awaits the formula.

Recursive formula

Let C(N) be maximum number of chunks as a function of the number of points N. It is easy to see that,

C(2) = 2

C(3) = 4

C(4) = 8

And a recursive expression for C(N) is

C(N) = C(N-1) + (1/6)(N-1)(N2 - 5N + 12)

Note that C(N) is the maximum number of chunks. For some N, getting the maximum number of chunks may require the points to be spaced non-equidistant from each other.

-

The findings of the Huygens probe indicate that Titan (Saturn's largest moon) has a nitrogenous atmosphere that periodically produces rain onto that moon's surface. Titan and Earth are the only known heavenly bodies with liquid rain. But given its hostile temperature of -180oC Titan's rain is not water, it's liquid methane. But enough of the cold facts.

The exciting part of this puzzle is that in 2004 you were given a large supply of beef jerky, a warm parka, and the job of being Titan's chief in-person, feet on the ground, up close and personal, weather observer. Upon your recent return, you reported your findings on Titan's rain activity. Let's call the days on Titan that it did not rain "sunny" days, even with the constant nitrogenous smog. (You grew up in Los Angeles.) You found that sunny days on Titan were followed by another sunny Titan day 29 days out of 30, (pss = 29/30), while rainy days were followed by another rainy day with probability prr = 0.7.

Like many heavenly satellites, Titan's rotation is tidally locked to its orbital period (16 Earth days.) If you were on Titan for say 9 Earth years, on about how many Titan days did you observe rain?

Here's my answer

So, given that we start with a clear day, it would take an expected 1/( 1/30) = 30 days to get a rainy day.

Once we have a rainy day, it would take an average of (1/.3) = 3.333333… days until we get a clear day again.

So, on average we would have (3 + 1/3) rainy days for every (33 + 1/3) days, which means that the weather on Titan is sunny 90% of the time.

-

It doesn't matter what random number generation method you use, the results will be the same. And there's no need to code the program if you can tell by looking at it what the results would be.

I think I see where we agree and where we diverge now. This two-envelope paradox has two variants,

A) There are two envelopes, both of which are unopened. We reach the same conclusion on this one.

I think we both agree on this one. Your (experiment) and (Bayesian posterior expectation) both agree that when we have two unopened envelopes, switching will not gain anything regardless of prior probability distribution. So we should not switch in this situation.

B) One of the envelope is opened and has $1000. This is where we disagree

My stance on this is that seeing the $1000 impart some information, which may affect your decision, depending on your prior information ( see for details).

I think your stance on this is that the $1000 does not give any information (from : "I would counter that the precise value that the player finds when he opens the envelope is arbitrary (you could multiply all of the s and g terms by any value you like) and would not affect the conclusions of the experiment."). That implies that the player should not switch regardless of what value he finds in the opened envelope.

But consider the situation where the uncle can only put monetary amounts that is discrete in the number of cents in the envelope. That is, the envelopes may hold $10.10, $9.01, or $10.99, but never any amount of finer precision like $10.9991 or $100.28631. Let's say that we open the first envelope and find $9.99.

The no-information-gained argument would say don't switch. But we gained some information from seeing the value of $9.99. Obviously, the envelope can not be the larger amount, since $9.99 is odd in the number of cents, so we should switch. If we change our strategy depending on what we see, then the value of the opened envelope is no longer arbitrary and irrelevant.

-

The experiment is fairly simple. Randomly generate however many "smaller" envelopes you want {s1, s2, s3 ... sn} and for each of them generate a matching "greater" envelope {g1, g2, g3 ... gn} where the value in gx = 2sx. Let the value of all sx and gx be small relative to the bank account. The participant is given a random envelope and given the choice of whether or not to switch -- if he was originally given an envelope sx then he will switch to envelope gx, and if he was originally given an envelope gy then he will switch to envelope sy. Now compare what happens if the participant takes a strategy of switching vs a strategy of staying with the initial envelope.

The probability distribution is random, and results should generalize to any probability distribution that you face.

One could argue that it doesn't fit the OP because the player isn't presented a value of $1000. I would counter that the precise value that the player finds when he opens the envelope is arbitrary (you could multiply all of the s and g terms by any value you like) and would not affect the conclusions of the experiment.

Can you clarify the part highlighted in red? Do you mean specifically to generate N random numbers from the uniform distribution between, say, 0 and L?

If I'm writing code for this experiment, I can't generate a random number without telling the computer precisely which probability distribution to use (and the corresponding distribution parameters). Most computer programs, for instance, will allow one to generate a random number uniformly between [0, L], but then you will need to supply the value for the upper limit L. (Reverend Bayes, is that you?)

Randomness comes in many forms (e.g., normal, uniform, exponential, etc. ) and I don't think it is possible to generate random numbers without specifying which probability distribution we are working with.

-

Brilliant! We will add your extra restraint. Find a way to best maximize your minimum score while keeping the perceived share as balanced as possible.

If we want to maximize the minimum perceived percentage plus making sure that no one thinks someone else is receiving more money, then here's an approximate strategy

Albert: Wardrobe + 3906852.6

Eric: Piano + 3905490.1

Theresa: 3906902.6

Sonya: Ring + Appliances + House + 280754.6

Eric and Sonya each thinks that he/she is getting 26.75% of the old man's wealth. Albert and Theresa think that they are getting 27.9% and 28.94%, respectively.

However, the most important thing is that from each person's point of view, he/she has the most money of all the siblings.

-

I can think of an "experiment" that I believe would be considered satisfactory by most people, that doesn't depend on the probability distribution of how much money is in the smaller or larger envelope.

I'd love to hear about this experiment that does not depend on the probability distribution of how much money is in A and B. My feeling is that Reverends Bayes is hiding somewhere, possibly heavily disguised, in the set-up. But I may be wrong, I often am.

-

What is the best means to dividing these items up? Let's define Best as the approach that gives the greatest benefit to the recipients. The submissions will be judged by examining the highest percentage over the expected individual's share of the individual who made the least over their expectation.

Can you elaborate on the bolded part? I'm not sure that I can parse that correctly.

There are many ways to determine a 'best' answer in this question. Both you and Pickett found effective answer and there are in fact more answers that would work in providing everyone at least 25% of the fair share of the goods and money. I am now seeking the answer that provides everyone the most profit. I do not want the average percentage of perceived benefit from the will, I want to award the 'best' solution to the one who can give the most to the person who received the least.

For example: (I am making these percentages up by the way)

Remember each person expects to receive 1/4 of the value of the old man's wealth

strategy 1's allocation strategy gave the following outcomes

person 1=27%

person 2=36%

person 3=40%

person 4=30%

strategy 2's allocation strategy gave the following outcomes

person 1= 30%

person 2= 29%

person 3= 28%

person 4= 29%

though the average gain in strategy 1 is 33.25% which is higher than strategy 2, person 1 only made 2% more than expected so this strategy is not preferred when compared to strategy 2 since its lowest recipient got 28% of the perceived wealth or 3% more than expected. So in these hypothetical cases, solution number 2 is perceived as better since the minimum beneficiary is better than the other cases minimum beneficiary.

**and of course these percentages are perceived percentages of value they placed on the old man's wealth.

If you are trying to maximize the minimum perceived percentage of the value, then you can divide the property in the following manner

Albert: Wardrobe + 3,855,971

Eric: Piano + 4,019,776

Theresa: 3,718,230

Sonya: Ring + Appliances + House + 406,020

From each person's point of view, he/she is getting 27.534% of the old man's wealth.

I think one more constraint that you will need to place on the division is that from each person's point of view, no one else is getting more money than he/she is. From the case above, Theresa is getting 27.5% of the old man's wealth from her point of view, but she thinks her brother Eric is getting almost 300,000 more than her (and he did nothing to deserve this except for appraising the house higher). Human nature being what it is, that is a prime cause for litigation.

-

What is the best means to dividing these items up? Let's define Best as the approach that gives the greatest benefit to the recipients. The submissions will be judged by examining the highest percentage over the expected individual's share of the individual who made the least over their expectation.

Can you elaborate on the bolded part? I'm not sure that I can parse that correctly.

-

I wrote a quick Java program to generate the total amount gained from switching or not over 100,000 trials with an envelope value of 1,000. Although the random number generator isn't entirely random, I thought it might help nonetheless.

import java.util.Random;

public class ProblemTest {

public static void main(String[] args) {

Random gen = new Random();

System.out.println(100000000);

long total = 0;

int otherenv = 0;

for (int i = 0; i < 100000; i++) {

otherenv = gen.nextInt(2);

if (otherenv == 0) {

total += 500;

} else if (otherenv == 1) {

total += 2000;

}

}

System.out.println(total);

System.out.println((double) 100000000 / total);

}

}

Total from not switching: 100,000,000.

Total from switching: 124,854,500.

The ratio: 0.8009322851799494.

I think when you code this problem as plasmid suggested, you have to be careful to specify what kind of random distribution you are drawing from since that essentially will define your prior assumptions about A and B. Most random number generator have an explicit upper range, so it might be a problem to sample uniformly from a infinite real line.

Morningstar: what would happen if you changed your program so the amount of money in the first envelope was random? And would that prove that it's always better to switch from whichever envelope you're looking at?

Bushindo: It's certainly better to not switch if you know that there is a ceiling for how much money could be in an envelope and you see an envelope containing more than half of that. But the problem doesn't make any mention of a ceiling. And it may very well be that you don't know how much money is in the bank account, or even if you did then you know that the amount of money in the envelopes is small compared to the size of the bank account but not precisely how small.

That might lead one down the road of looking for a probability distribution with an interesting property. But a complete answer to the question should take into account that the probability distribution could be anything.

I think the discussion should be about what prior distribution is more representative of the puzzle conditions

The discussion above shows that the switching paradox is completely resolved for any well-defined probability distributions pmin( ) and pmax( ). That is, that integral of pmin( ) and pmax( ) over the entire real line must sum up to one, and they can be any well-defined distribution (gaussian, exponential, poisson, etc.). If we have those, then for any x, we can compute the posterior probabilities straightforwardly

P( A is smaller number | A = x) = pmin( x )/[ pmin( x ) + pmax( x ) ]

P( A is bigger number | A = x) = pmax( x )/[ pmin( x ) + pmax( x ) ]

I think the discussion here is precisely that we are not given any information about the prior distribution of A and B. pmin and pmax are our prior distribution, and we must construct an uninformative prior that reflects our beliefs about the distribution of A and B given the totality of circumstance.

I don't think it is reasonable to believe that pmin and pmax are distributed uniformly on the real line. That is because the universe is finite, and last I checked the number of atoms in the universe is less than 10100. So the uncle can not possibly have more than $10100 in the envelopes. That defines a hard upper limit on the envelope values.

I think given this compact support, it is more reasonable to define a non-informative prior for the sum of A and B as the uniform distribution on [0, S], where S < 10100. The solution of the puzzle is then subjective. Given what you know of the uncle, what do you think the value of for the sum S is? If S is 2000 or greater, then switch your $1000 envelope. If not, don't switch.

Suppose we remove the physical limitation on the money, and allow the money values A and B to be uniformly distributed on the real line. In this case, I counter that the resolution of the paradox comes from the fact that the expected value in each envelope is infinite. If both envelopes are unopened, so there is no point in switching since they both have infinite expected value.

-

Love this problem. Here's how I would approach it.

So the assets to be divided are

Piano

Ring

Home

Appliances

Wardrobe

$12,000,000.

Here's a method that guarantees each person be happy from his/her perspective.

* Divide the $12,000,000 into 4. Each person gets 3,000,000.

* Hold an auction among the 4 heirs for each of the other assets. Under perfect scenario, this will result in a sale price slightly higher than the second highest valuation of the property. Give the property to the winning person and then divide the proceeds evenly by 4.

* For instance, let's say we auction the house among the 4 heirs. Sonya would bid $2,600,001 and win the house. She then pays $2,600,001 to the lawyer and get ownership of the house. The lawyer will then divide $2,600,001 into 4 - one portion for each heir.

* Repeat with the other assets.

If one of the people got the house which in Sonya's case means the house is worth 2.6 million should she also get 1/4 of the value back? Sounds like she got an incredible discount.

I don't think that would be a problem

Technically, before the sale Sonya has a 25% ownership in the property, so she should get 1/4 of the proceeds. Essentially, she is paying 75% of the sale price $2,600,001 to the other 3 heirs for their 75% ownership.

As to whether she got a discount. Yes, she did from her perspective. From Albert and Theresa's perspectives, Sonya overpaid but I doubt they would be complaining since they get a bigger share of the pie from their point of view. Eric receives a share that is 25 cents above his valuation, so he should be happy too.

-

An old man left his fortune and goods to his four children. In the will it stated that each item should be divided up fairly so that each person should have an equal share. Unfortunately, the lawyer found that each person appraised the objects' worth differently and no official appraiser was nearby. The lawyer had each of the children complete a simple little informal appraisal of each of the major items. The results are as follows:

Albert:

Piano= 1,000

Ring= $120

Home= 2,000,000

Appliances= $500

Wardrobe= $50

Eric:

Piano= 1,500

Ring= $20

Home= 2,600,000

Appliances= $200

Wardrobe= $10

Theresa:

Piano= 900

Ring= $75

Home= 1,500,000

Appliances= $350

Wardrobe= $10

Sonya:

Piano= 1,150

Ring= $150

Home= 4,000,000

Appliances= $750

Wardrobe= $0

The deceased also had a net value of $12,000,000. How should the lawyer divide up the assets using only this given information to where each person walks away with an equal (or better than equal) percentage of the deceased's worth?

Love this problem. Here's how I would approach it.

So the assets to be divided are

Piano

Ring

Home

Appliances

Wardrobe

$12,000,000.

Here's a method that guarantees each person be happy from his/her perspective.

* Divide the $12,000,000 into 4. Each person gets 3,000,000.

* Hold an auction among the 4 heirs for each of the other assets. Under perfect scenario, this will result in a sale price slightly higher than the second highest valuation of the property. Give the property to the winning person and then divide the proceeds evenly by 4.

* For instance, let's say we auction the house among the 4 heirs. Sonya would bid $2,600,001 and win the house. She then pays $2,600,001 to the lawyer and get ownership of the house. The lawyer will then divide $2,600,001 into 4 - one portion for each heir.

* Repeat with the other assets.

-

The paradox comes from the fact that $1000 is arbitrary.Whatever the amount of money you found in the envelope you pulled let's call it X. Unless X is 1 penny, it's a 50% chance that the other envelope has higher or lower amount. The potential gain by switching is X. The potential loss is X/2. So, by switching you gain X * 1/2 - X/2 * 1/2 = X/4 on average.

Can you gain as much by switching back? Using the same reasoning?

There is a paradox: A gain for switching can be anticipated.

Yet, there is a preferred envelope, and if we initially chose it we should not switch.

Not sure what you mean by "switching back". The 50/50 comes from randomly picking one of 2 envelopes from which we know one has double the money of the other. I don't see a paradox.

Suppose I were to point to an envelope before any were opened, and I said "That envelope contains some amount of money; call it $X. The other envelope therefore must contain $2X or $X/2." You could now argue that the expected earnings from picking the other envelope are $5X/4, so you should choose the other envelope. But in reality, have I actually given you any more information than you already had when you only knew that one envelope contains twice as much money as the other?

I think k-man is right. There is no infinite switching paradox in this formulation. That is because being able to see the envelope amount ($1000) nails down the value of one envelope. In other words, the amount $1000 is not arbitrary. The moment we see it, Reverend Bayes has already entered the room.

So, let's say that we do some simple statistics. Given that the first envelope has value 1000, then the second envelope either has 500 or 2000.

So let's say we switch because the expected value of the unopened envelope is larger than 1000. Now we have an unopened envelope, and the other envelope has 1000. The value of the unopened envelope is still either 500 or 2000. The expected value of the unopened envelope in our possession is still larger than 1000, so switching again is unwise.

Now, if both envelopes are unopened, then that is a different situation. See discussion below.

Let me try to disentangle the paradox using Bayesian statistics

The discussion below will look to put a proper Bayesian prior on the envelope values A and B. We will show that when we have a proper prior, there is no infinite switching scenario, regardless of whether the first envelope is opened or not.

Let the envelope values be A and B. We need some prior distribution on the smaller value and the larger value. Without lack of generality, let's say that the smaller value min(A,B) has a uniform distribution between [0, 10,000]. Consequently, the larger value max(A,B) has the uniform distribution between [0, 20,000]. Their probability density functions are

pmin( x ) = 1/10000 for x in [0, 10000],

pmin( x ) = 0 otherwise

pmax( x ) = 1/20000 for x in [0, 20000],

pmax( x ) = 0 otherwise

So, let's look at the case where we open the first envelope and it is $1000. Given that A = 1000, we need to compute

P( A is smaller number | A = 1000) = pmin( 1000 )/[ pmin( 1000 ) + pmax( 1000 ) ] = 2/3

P( A is bigger number | A = 1000) = pmax( 1000 )/[ pmin( 1000 ) + pmax( 1000 ) ] = 1/3

This coincidentally is the same conclusion that plasmid came to in post #7. The expected value of envelope B is now (2000)*2/3 + (1/3)*(500) = 1500. So we should switch.

However, we see that there is no point to switching a second time. If we compute the expected value of envelope B given that A = 1000, we still see that E( B | A = 1000) = 1500.

What is happening here is that we are gaining some information from having seen the value of A. If A happened to be equal to 15,000, for instance, then from our prior we can calculate the following probabilities

P( A is smaller number | A = 15000) = pmin( 15000 )/[ pmin( 15000 ) + pmax( 15000 ) ] = 0

P( A is bigger number | A = 15000) = pmax( 15000 )/[ pmin( 15000 ) + pmax( 15000 ) ] = 1

In this case, if A = 15000, then A is 100% guaranteed to be the larger envelope. We should not switch.

So, let's consider the case where we don't see the value A or B. In that case, we will need to compute the integral of expected value for switching by integrating the equations above for A between [0, 20000]. If A falls within [0, 10000) then switching would on average gain money. However, if A falls within [10000, 20000], then switching would lose money. If we integrate over the entire range, we get expected value of 0 for switching. So that removes the infinite switching scenario.

Now, let me discuss the infinite switching paradox, which hinges on an improper prior distribution for A and B. That is, it assumes that A and B are uniformly distributed over the entire real line, and that leads to all sort of unintuitive trouble. I propose such a scenario is not possible, since the rich uncle can not possibly have unbounded money amounts in the two envelopes.

-

Here's a follow-up question:

Charles is clearly crushing Bob whenever the the envelopes are 1-3, and he's losing all his equity on the very rare case where the envelopes are 3^29-3^30. So, why can't he simply make a small change to his strategy? Keep his exact same strategy, but in the rare case where he sees exactly $3^29, he also switches. Wouldn't this fix his problem? If not, what's wrong with that strategy?

In that case

Let strategy A be the case where Charles switches only if his envelope has 1. Let's call the strategy where Charles switches whenever he gets 1 or 329 strategy B. There's a tradeoff in expected winning and the number of times that Charles beat Bob's earnings. Strategy B has higher expected winning than strategy A's, at the cost that Charles will beat Bob's earning less often.

-

Alec proposes to Bob and Charles that they will play a game over a 25 year period. Each day while Bob and Charles are away from Alec's house, Alec will prepare two envelopes. Alec will flip a fair coin until the coin comes up tails OR until he has flipped his coin 30 times. Based on the number of flips he makes each day, he will prepare the two envelops as follows:

If the first flip is tails, he will prepare one envelope with $1 in it and the other with $3 in it. If he flips twice, the two envelopes will contain $3 and $9. Three flips will produce envelopes with $9 and $27 and so on. If he somehow flips 30 times, the larger envelope will contain $2.0589113e+14 and the other envelope will contain $6.8630377e+13.

Both Bob and Charles know Alec's procedure and the exact distribution of the possible envelope values.

Once Alec has set the two envelopes, he will randomly place one envelope on the left side of a table and the other on the right side. Both Bob and Charles come to the table, Bob is given the right envelope and Charles the left envelope. They are allowed to privately examine the contents of their envelope. They are then given the chance, privately, to switch. They get to keep the contents of the envelope they end up with each day. If one switches and other doesn't, they will end up with the same envelope that particular day.

Question #1: Bob wants to maximize his expected value over the course of the entire game. What strategy should he use?

Question #2: Charles is less interested in maximizing his EV. He's motivated to end up winning more money than Bob over the course of the 25 years. If he knows Bob's "perfect" strategy, what strategy can Charles use to maximize his chances of ending up ahead of Bob after the 25 years?

If Charles maximizes his chances of beating Bob, he will end up ahead of Bob nearly 100% of the time.

If each player will get the full amount if they happen to choose the same envelope, then

The strategy with the highest expected value for money earned is to always switch, except when the envelope amount is the highest possible- 330.

If Charles is interested in beating Bob's earning most of the time, then he should switch envelope *only if* his envelope amount is 1. Most of the time Charles will earn a few more bucks than Bob. However, Bob's expected winning value is still higher because in the rare case where Bob beats Charles, his earnings will be astronomically larger than Charles'.

-

The wise man on the mountain knew the secret of life. The seeker was granted a 12-hour audience; all he had to do was climb. He began the climb precisely at 8:00 one morning, climbing at the rate of 1.5 mph. After his meeting with the seer, he immediately descended using the same trail, at 4.5 mph, and he reached the bottom at noon the next day. And now what question shall we ask?

A. What is the meaning of life told to the seeker?

B. How long is the trail?

Question B.

Answer for Question A

42

-

There are kn ways in which n dice can each show k or less.

For the highest score to equal k, we must subtract those cases for which each die shows less than k; these number (k − 1)n.

So, k is the highest score in kn − (k − 1)n cases out of 6n.

In other words, pn(k), the probability that the highest individual score is k, is (kn − (k − 1)n)/6n.

The expected value, E(n), of the highest score is the sum, from k = 1 to 6, of k · pn(k).

Hence E(n) = [6(6n−5n) + 5(5n−4n) + 4(4n−3n) + 3(3n−2n) + 2(2n−1n) + 1(1n−0n)]/6n. = 6 − (1n + 2n + 3n + 4n + 5n)/6n.7 - (1^n + 2^n + 3^n + 4^n + 5^n + 6^n)/6^n =

= 6 + 6^n/6^n - (1^n + 2^n + 3^n + 4^n + 5^n + 6^n)/6^n =

= 6 - (1^n + 2^n + 3^n + 4^n + 5^n)/6^n

if they are equivalent then why don't they produce the same expected values as Bushindo pointed out.

I misread the equation. Yours was

6 − (1n + 2n + 3n + 4n + 5n)/6n.

But I misread it as

6 − (1n + 2n + 3n + 4n + 5n + 6n) /6n.

Mea culpa =)

-

There are kn ways in which n dice can each show k or less.

For the highest score to equal k, we must subtract those cases for which each die shows less than k; these number (k − 1)n.

So, k is the highest score in kn − (k − 1)n cases out of 6n.

In other words, pn(k), the probability that the highest individual score is k, is (kn − (k − 1)n)/6n.

The expected value, E(n), of the highest score is the sum, from k = 1 to 6, of k · pn(k).

Hence E(n) = [6(6n−5n) + 5(5n−4n) + 4(4n−3n) + 3(3n−2n) + 2(2n−1n) + 1(1n−0n)]/6n. = 6 − (1n + 2n + 3n + 4n + 5n)/6n.Nevermind, they are equivalent. See witzar's

Betting on red

in New Logic/Math Puzzles

Posted